Apple’s iOS 14 update has largely eliminated creative-level data for iOS devices. Instead of granular data, most install and lower-funnel event reporting now relies on modeled data. Read on to learn how you can approach creative testing with AI.

Algorithmic Testing: Meta + Google

On Meta: Dynamic Creative Optimization (DCO). DCO tests combinations of creative elements (titles, descriptions, images, etc.) and delivers the best-performing combination to each subset of our audience. Dynamic creative optimization is a setting, not a campaign type: it is enabled at the ad set level and allows only “one” ad per ad set (which can generate up to 625 variations).
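Because DCO mixes and matches elements, the number of possible ads is the product of the number of options in each slot. Here is a minimal Python sketch, assuming four hypothetical slots with five options each. The slot names and counts are illustrative only and are not Meta's documented per-field limits.

```python
from itertools import product
from math import prod

# Hypothetical asset pools per creative slot. The slot names and the count of
# five options each are illustrative assumptions, not Meta's actual limits.
assets = {
    "title": [f"title_{i}" for i in range(1, 6)],
    "description": [f"description_{i}" for i in range(1, 6)],
    "image": [f"image_{i}" for i in range(1, 6)],
    "primary_text": [f"text_{i}" for i in range(1, 6)],
}


def enumerate_combinations(pools):
    """Yield every possible ad as a dict mapping slot name -> chosen asset."""
    slots = list(pools)
    for choice in product(*(pools[slot] for slot in slots)):
        yield dict(zip(slots, choice))


combos = list(enumerate_combinations(assets))

# The combination count is the product of the per-slot option counts.
expected = prod(len(options) for options in assets.values())
print(len(combos), expected)  # 625 625
print(combos[0])
```

Each extra asset multiplies the test space rather than adding to it, so no realistic budget can give every combination enough spend to judge it by hand. That is the job handed to the algorithm.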

Automated App Ads (AAA) is a Meta campaign type for app installs. Ad and campaign settings allow for a broad audience, and machine learning takes the lead. Advertisers provide up to 50 different images and videos, with up to 5 headline and primary text variations. Unlike DCO, AAA is a campaign type.

On the one hand, DCO means less manual creation and more ad variations. On the other hand, AAA doesn’t report in-app event conversion volume (other than Purchase), there is no creative-level data, and the algorithm can be unreliable for down-funnel events because it pushes spend toward early upper-funnel winners.

On Google: Responsive Ads. Google has offered this mix-and-match approach for a while: input 5 headlines, 5 descriptions, plus videos and images, and the algorithm does the rest.

To understand audiences, consider running the same ad creative against two or more audiences split by ad set. This approach applies to Google display ads.

Pros: Understand audiences

Cons: Does not advance creative learnings

AB testing is a direct comparison test designed around a specific learning. In an AB test, change only one element, such as copy or color.

Pros: Granular learning, clear winner

Cons: Expensive, does not work with iOS install campaigns, unreliable results, cannot return results within the first 2 days, must be split into individual campaigns
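How a “clear winner” is called depends on the statistical method. One common choice, shown here as a minimal Python sketch, is a two-proportion z-test on conversion rates. The function name and the click and install counts are hypothetical. The sketch also shows why low-volume readouts, such as those from the first couple of days, are unreliable.

```python
from math import erf, sqrt


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates.

    conv_* are conversions (e.g. installs) and n_* are trials (e.g. clicks).
    Returns (z, p_value) using the pooled rate under the null hypothesis.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / se
    # Standard normal CDF via the error function: Phi(x) = (1 + erf(x / sqrt(2))) / 2
    phi = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - phi)
    return z, p_value


# Made-up numbers: variant B lifts the click-to-install rate from 2.0% to 2.6%.
z_big, p_big = two_proportion_z_test(200, 10_000, 260, 10_000)
# Same rates, one tenth of the traffic.
z_small, p_small = two_proportion_z_test(20, 1_000, 26, 1_000)

print(f"10k clicks per variant: z={z_big:.2f}, p={p_big:.4f}")
print(f"1k clicks per variant:  z={z_small:.2f}, p={p_small:.4f}")
```

With 10,000 clicks per variant the lift is significant at the 5% level. With 1,000 clicks per variant the same lift is not.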

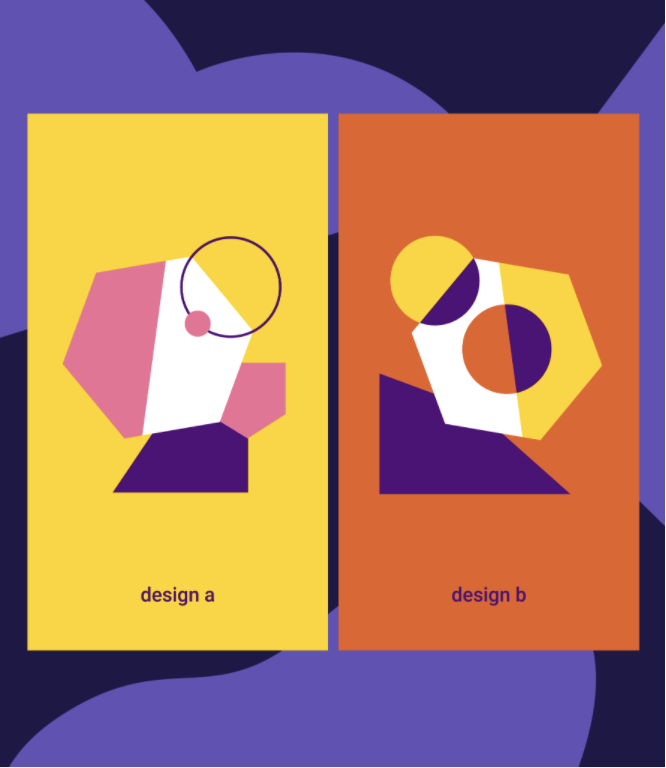

Another approach is the open field test: we run multiple ad creatives with various themes concurrently. Think of it as a horse race. The gates open and the fully formed ads run their course. Remember to roll over a past top performer to use as a benchmark.

Pros: Easy to set up, easy to upkeep

Cons: Success is hard to attribute to a specific design element, resets learning
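Comparing challengers against the rolled-over benchmark can be as simple as indexing each creative's cost per install (CPI) to the benchmark's. Here is a minimal Python sketch. CPI as the success metric, the class and function names, and the figures are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class CreativeResult:
    name: str
    spend: float
    installs: int

    @property
    def cpi(self) -> float:
        # Cost per install; infinite if the creative has no installs yet.
        return self.spend / self.installs if self.installs else float("inf")


def rank_against_benchmark(results, benchmark_name):
    """Return (name, cpi, index) rows sorted from cheapest to most expensive CPI.

    index = benchmark CPI / creative CPI, so an index above 1.0 means the
    creative acquires installs more cheaply than the benchmark.
    """
    benchmark = next(r for r in results if r.name == benchmark_name)
    rows = [(r.name, r.cpi, benchmark.cpi / r.cpi) for r in results]
    return sorted(rows, key=lambda row: row[1])


# Hypothetical open field test: two new themes plus the rolled-over top performer.
results = [
    CreativeResult("past_top_performer", spend=1000.0, installs=400),
    CreativeResult("theme_value", spend=1000.0, installs=500),
    CreativeResult("theme_variety", spend=1000.0, installs=320),
]

for name, cpi, index in rank_against_benchmark(results, "past_top_performer"):
    print(f"{name:<20} CPI ${cpi:.2f}  index {index:.2f}")
```

A theme that beats the benchmark still doesn't tell you which design element won, which is the attribution con listed above.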

Run several ad groups with different themes on Google App Campaigns (AC). For example, if you are testing “value” vs. “variety” messaging for a fitness app, you would compare themed creatives at the ad group level. Note: this is not an option on Meta, where themes must be split by campaign.

Pros: Get a sense of overall messaging success

Cons: Does not provide granular combinations

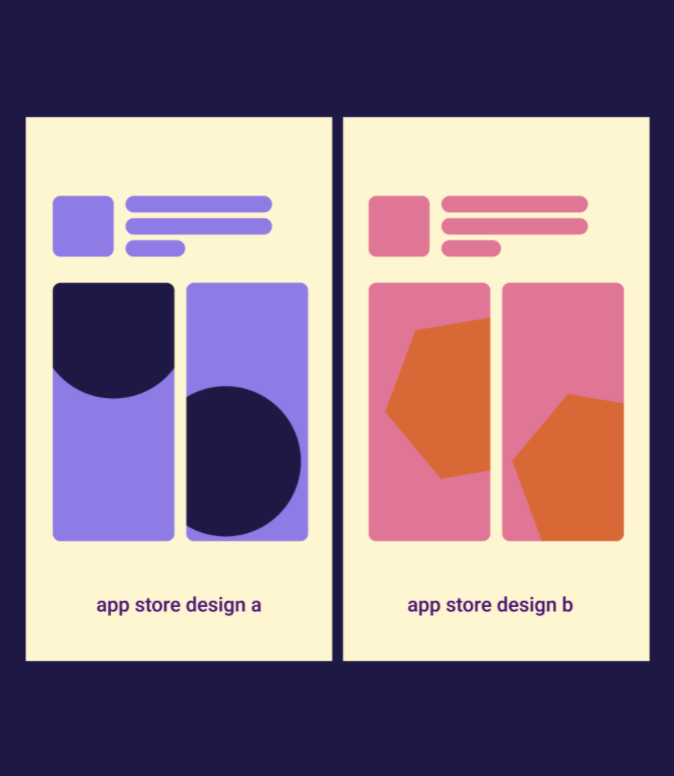

The app stores are expanding their AB testing capabilities via AI.

Pros: Apple’s App Store and Google Play now both offer testing, lower design lift compared to Custom Product Pages

Cons: Still in early stages, not yet reliable

Determining Success


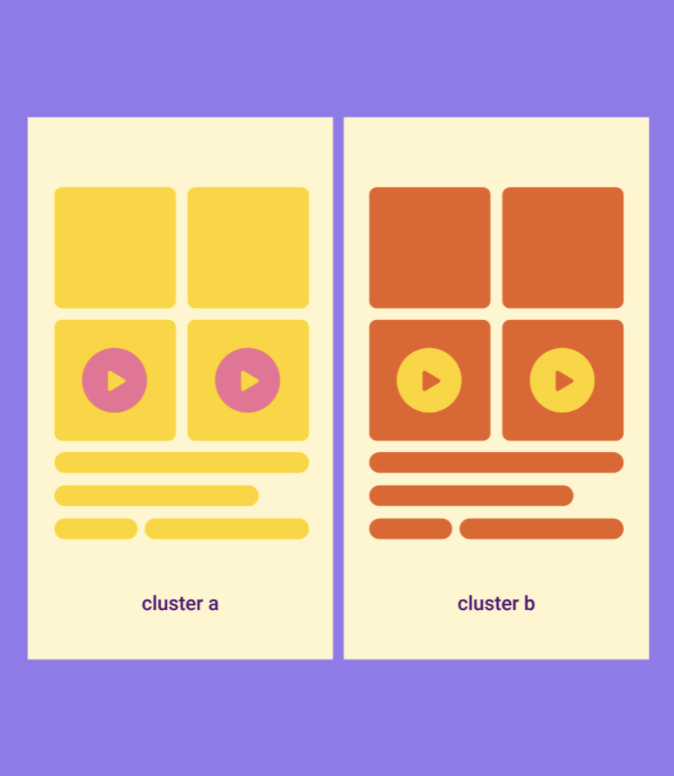

Expectations for evaluating ad performance have changed. Many of Meta’s algorithms are less predictable and can be misleading for lower-funnel marketing objectives. Because AI campaigns provide no ad-level data, we often rely on each asset’s percentage of spend to identify dynamic ad winners. Note: creative fatigue can still occur, so follow channel best practices and refresh creative assets accordingly.

Determining which combinations are driving volume can be difficult. Twigeo has developed a visualization UI that shows which combinations capture the largest percentage of spend. Interested? Reach out to us!
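The visualization UI described above is not shown here. The underlying arithmetic can be sketched in Python, assuming your reporting export gives spend per creative combination (not every campaign type does). The data, key layout, and function names are hypothetical. The sketch ranks combinations by share of spend, then rolls spend up to individual elements to show which headline or image the algorithm favors.

```python
from collections import defaultdict

# Hypothetical export: spend per (headline, image) combination.
combo_spend = {
    ("headline_a", "image_1"): 1200.0,
    ("headline_a", "image_2"): 300.0,
    ("headline_b", "image_1"): 400.0,
    ("headline_b", "image_2"): 100.0,
}


def spend_share(spend_by_key):
    """Map each key to its fraction of total spend, largest share first."""
    total = sum(spend_by_key.values())
    ranked = sorted(spend_by_key.items(), key=lambda item: item[1], reverse=True)
    return {key: spend / total for key, spend in ranked}


def element_share(spend_by_combo, position):
    """Roll combination spend up to the element at `position` (0 = headline, 1 = image)."""
    rollup = defaultdict(float)
    for combo, spend in spend_by_combo.items():
        rollup[combo[position]] += spend
    return spend_share(rollup)


for combo, share in spend_share(combo_spend).items():
    print(combo, f"{share:.0%}")
print("headlines:", element_share(combo_spend, 0))
print("images:", element_share(combo_spend, 1))
```

Spend share shows where the algorithm is sending budget, not necessarily what drives lower-funnel results, so read it alongside the caveats on Meta’s algorithms above.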